MUMT 307 - Final Project - Winter 2015

Marc Jarvis - Simulating the Reactable in Unity

Summary:

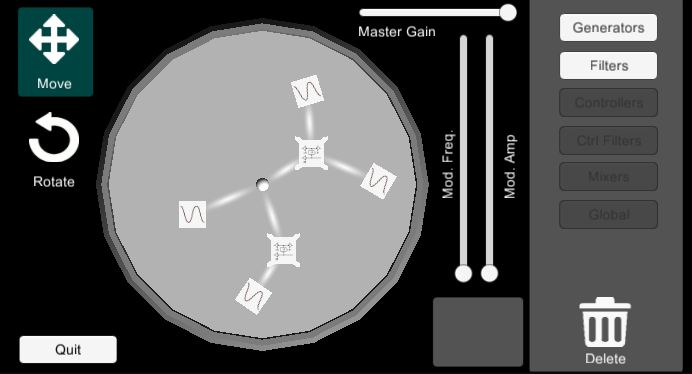

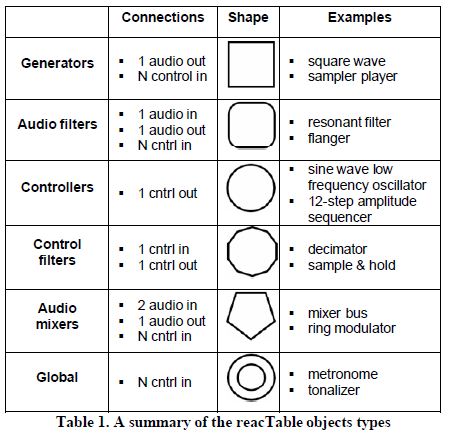

The Reactable is a tactile audio synthesizer in which the placement and rotation of objects on a surface produce a sound composition that can be updated in real time through user interaction.

A software version of the application already exists, but I was interested in creating my own basic version of the application in Unity for the following reasons:

- Unity is a popular, free videogame engine used by both high-end and independent game companies. However, its audio features appear to focus primarily on imported audio clips and, from what I have seen, the use of procedurally generated sound within the engine is still in its infancy.

- As part of my personal education, I wanted to practice programming some of the audio synthesis techniques seen in class from the ground up, to really understand the effect each parameter has.

- I am interested in a system where each component in the signal path can be swapped out and manipulated in real time, and where a chain of components combines to build a final sound.

My primary focus with this project was the synthesis of different audio components and the ability to chain objects together into a single, easily manipulated sound.

Current approaches:

Thanks to the popularity of Unity and its use of common programming languages (C# and JavaScript), many open-source libraries have been built for the engine. For example, it is very easy to find scripts that enable Open Sound Control (OSC) specifically for Unity. With these, messages can be exchanged with other software, such as Max/MSP and Pure Data. Unity also allows native plug-ins, so libraries such as the Synthesis ToolKit (STK) should work very well for data and sound manipulation. The learning pages on the main Unity website also promote real-time audio synthesis using SuperCollider.

My approach:

Despite these available tools, I was keen to do everything from the ground up, completely within Unity because:

- I wanted practice coding the synthesis algorithms in a language other than Matlab.

- I was generally curious about how Unity stores and manipulates audio.

- This is a great project for practicing complex software development, primarily creating object interfaces that are easy to extend, so that new objects can be added in the future and still interact with objects of different types.

Making Of:

The current build can be played in the web player or downloaded here (8 MB): Windows, Macintosh, Linux.

The gain of the audio produced by a generator is controlled by how close the object is to the center, and its frequency by how many degrees the object has been rotated from its original position (this can go beyond 360). Certain objects, such as the AM and FM generators, have additional attributes that the sliders provide access to. Of note, the Pulse object is more of an experiment: it is simply a Sine Generator that only sends a signal when pressed, and I hope to develop it into a sequencer controller at a future date. The Flanger is a little difficult to hear and needs further work to flesh it out, but I have left it in the build to show how connections work. Its influence is somewhat audible when AM or FM generators are attached to it.
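As a rough illustration of the position and rotation mapping described above, here is a minimal sketch. The class name TableMapping, the linear gain falloff (closer to the center is louder) and the one-octave-per-full-turn frequency curve are all assumptions made for the example; the project's actual mapping may differ.

```csharp
using System;

// Hypothetical helper: the names, constants and curve shapes below are
// assumptions for illustration, not taken from the project.
public static class TableMapping
{
    // Gain falls off linearly from maxGain at the center to 0 at the table edge.
    public static double GainFromDistance(double distance, double tableRadius, double maxGain)
    {
        double closeness = 1.0 - distance / tableRadius;
        return maxGain * Math.Max(0.0, Math.Min(1.0, closeness));
    }

    // One full turn (360 degrees) shifts the pitch by one octave, so rotating
    // beyond 360 degrees keeps raising the frequency.
    public static double FrequencyFromRotation(double rotationDegrees, double baseFrequency)
    {
        return baseFrequency * Math.Pow(2.0, rotationDegrees / 360.0);
    }
}
```

The results could then be passed to SetGain and SetFrequency on the generator attached to the object. An exponential frequency curve is used here only because equal rotations then produce equal musical intervals.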

In the original paper, the Reactable has six types of controller objects. Due to time constraints, I only explored implementing two of them: the Generator and the Audio Filter.

The audio itself is produced in the OnAudioFilterRead method of a MonoBehaviour attached to a GameObject. Unity passes in a float array of interleaved samples (2048 elements in this build) and the number of channels in that array. Manipulating these float values, which range from -1 to 1, is what produces the audible signal we hear.

```csharp
void OnAudioFilterRead(float[] data, int channels){
    if(_generator != null){
        _generator.Generate(data, channels);
    }
}
```

The bulk of the audio generation lives in the interface and abstract class of each object type. Here is a section of the code that creates the sounds produced by the Generator objects, to help illustrate the implementation (some adaptations from code found here: http://forum.unity3d.com/threads/onaudiofilterread-question.170273/).

```csharp
public interface GeneratorInterface{
    void Generate(float[] data, int channels);
    void ConnectTo(AbstractFilter filter); // Data will flow out into the next filter
    void Disconnect();
    void SetFrequency(double frequency);
    double GetFrequency();
    void SetGain(double gain);
}
```

```csharp
public abstract class AbstractGenerator : GeneratorInterface{
    protected AbstractFilter _outputFilter;    // next stage in the chain, or null
    protected double _frequency = 440;         // in Hz
    protected double _gain = 0.05;
    protected double _omega;                   // carrier phase increment per sample
    protected double _phase;                   // carrier phase in radians
    protected double _omega_mod;               // modulator phase increment per sample
    protected double _phase_mod;               // modulator phase in radians
    // Hard-coded here; Unity reports the actual rate via AudioSettings.outputSampleRate.
    protected double _samplingFrequency = 48000;
    protected GeneratorType _type = GeneratorType.NOISE;

    public abstract void Generate(float[] data, int channels);

    public void ConnectTo(AbstractFilter filter){
        _outputFilter = filter;
    }

    public void Disconnect(){
        _outputFilter = null;
    }

    public void SetFrequency(double frequency){
        _frequency = frequency;
    }

    public double GetFrequency(){
        return _frequency;
    }

    public void SetGain(double gain){
        _gain = gain;
    }
}
```

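```csharp
public class Generator_Noise : AbstractGenerator{
    // Created once rather than per buffer, so that consecutive buffers
    // cannot end up with the same time-based seed.
    private readonly System.Random _rand = new System.Random();

    public override void Generate(float[] data, int channels){
        for(int i = 0; i < data.Length; ++i){
            // uniform white noise in [-1, 1), scaled by the gain
            double x = _rand.NextDouble()*2 - 1.0;
            data[i] = (float)(x * _gain);
        }
        if(_outputFilter != null){
            _outputFilter.Filter(data, channels);
        }
    }
}
```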

```csharp
using System; // for Math

public class Generator_Sinusoid : AbstractGenerator{
    public override void Generate(float[] data, int channels){
        // update the phase increment in case the frequency has changed
        _omega = _frequency * 2 * Math.PI / _samplingFrequency;
        for (int i = 0; i < data.Length; i += channels){
            _phase += _omega;
            // wrap the phase (not the increment) so it stays within one period
            if (_phase > 2 * Math.PI) _phase -= 2 * Math.PI;
            // this is where we copy audio data to make them "available" to Unity
            data[i] = (float)(_gain * Math.Sin(_phase));
            // if we have stereo, we copy the mono data to each channel
            if (channels == 2) data[i + 1] = data[i];
        }
        if(_outputFilter != null){
            _outputFilter.Filter(data, channels);
        }
    }
}
```

```csharp
using System; // for Math

public class Generator_FM : AbstractGenerator{
    private double _mod_frequency = 0.0;
    private double _mod_index = 1.0;

    public override void Generate(float[] data, int channels){
        // update the phase increments in case a frequency has changed
        _omega = _frequency * 2 * Math.PI / _samplingFrequency;
        _omega_mod = _mod_frequency * 2 * Math.PI / _samplingFrequency;
        for (int i = 0; i < data.Length; i += channels){
            _phase += _omega;
            _phase_mod += _omega_mod;
            // wrap both phases (not the increments) so they stay within one period
            if (_phase > 2 * Math.PI) _phase -= 2 * Math.PI;
            if (_phase_mod > 2 * Math.PI) _phase_mod -= 2 * Math.PI;
            // this is where we copy audio data to make them "available" to Unity
            data[i] = (float)(_gain * Math.Sin(_phase + _mod_index * Math.Cos(_phase_mod)));
            // if we have stereo, we copy the mono data to each channel
            if (channels == 2) data[i + 1] = data[i];
        }
        if(_outputFilter != null){
            _outputFilter.Filter(data, channels);
        }
    }

    public void setFrequencyMod(double frequency){
        _mod_frequency = frequency;
    }

    public void setModIndex(double gain){
        _mod_index = gain;
    }
}
```
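The AM generator mentioned earlier is not part of the listing above. For comparison with the FM generator, here is a minimal standalone sketch of how amplitude modulation could follow the same phase-accumulator pattern. The class name AmSketch, its public fields and the (1 + depth × modulator) formula are assumptions for illustration; this is not the project's actual AM class.

```csharp
using System;

// Hypothetical standalone AM oscillator, written for illustration only.
public class AmSketch
{
    public double Frequency = 440;           // carrier frequency in Hz
    public double ModFrequency = 5;          // modulator frequency in Hz
    public double ModDepth = 0.5;            // 0 = no modulation, 1 = full depth
    public double Gain = 0.05;
    public double SamplingFrequency = 48000; // assumed output rate

    private double _phase;
    private double _phaseMod;

    public void Generate(float[] data, int channels)
    {
        double omega = 2 * Math.PI * Frequency / SamplingFrequency;
        double omegaMod = 2 * Math.PI * ModFrequency / SamplingFrequency;

        for (int i = 0; i + channels <= data.Length; i += channels)
        {
            // Classic AM: the carrier is scaled by (1 + depth * modulator).
            double envelope = 1.0 + ModDepth * Math.Cos(_phaseMod);
            float sample = (float)(Gain * envelope * Math.Sin(_phase));

            // Copy the mono sample to every channel of this frame.
            for (int c = 0; c < channels; ++c) data[i + c] = sample;

            // Advance and wrap both phases.
            _phase += omega;
            _phaseMod += omegaMod;
            if (_phase > 2 * Math.PI) _phase -= 2 * Math.PI;
            if (_phaseMod > 2 * Math.PI) _phaseMod -= 2 * Math.PI;
        }
    }
}
```

With this formula the peak amplitude is Gain × (1 + ModDepth), so the gain may need to be lowered as the depth increases to stay within the -1 to 1 range.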

Future Work:

The obvious extension is to add more of the object types and to improve the graphical interface. It would also be fun to display plots of the resulting output signal in its various forms (frequencies and waveforms). Issues with the current build need to be addressed, primarily:

- A context-sensitive GUI should appear for filters that require more parameters, such as the IIR filter, to allow more control over their feedback and feedforward coefficients.

- The FM and AM generators should become Audio Mixers, so that different generators can be passed in. As a thought, the closer generator would be the carrier and the farther one would be the modulator. This would produce different sounds when a waveform other than a sinusoid is used, and would remove the need for an additional GUI.

- The ability to toggle the sound output of objects already placed on the table would make composition easier.

- Being able to save the current state of the table would allow artists to keep their favourite sounds, and if interactions could be recorded, playback would free it from being strictly a live performance.
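To make the feedback and feedforward coefficients in the first item above concrete, here is a minimal sketch of a direct-form I IIR filter. The class name IirSketch and its layout are assumptions for illustration and are not the project's AbstractFilter; the coefficient convention follows Matlab's filter(b, a, x), with a[0] assumed to be 1.

```csharp
using System;

// Hypothetical direct-form I IIR filter for a single channel.
// Implements y[n] = sum_k b[k] x[n-k] - sum_{k>=1} a[k] y[n-k], assuming a[0] == 1.
public class IirSketch
{
    private readonly double[] _b; // feedforward coefficients b[0..M]
    private readonly double[] _a; // feedback coefficients a[0..N], a[0] assumed to be 1
    private readonly double[] _x; // _x[k] holds x[n-k]
    private readonly double[] _y; // _y[k] holds y[n-k] for k >= 1

    public IirSketch(double[] feedforward, double[] feedback)
    {
        if (feedforward.Length == 0 || feedback.Length == 0)
            throw new ArgumentException("both coefficient arrays need at least one entry");
        _b = (double[])feedforward.Clone();
        _a = (double[])feedback.Clone();
        _x = new double[_b.Length];
        _y = new double[_a.Length];
    }

    public double Process(double input)
    {
        // Shift the input and output histories by one sample.
        for (int k = _x.Length - 1; k > 0; --k) _x[k] = _x[k - 1];
        for (int k = _y.Length - 1; k > 0; --k) _y[k] = _y[k - 1];
        _x[0] = input;

        double output = 0.0;
        for (int k = 0; k < _b.Length; ++k) output += _b[k] * _x[k]; // feedforward part
        for (int k = 1; k < _a.Length; ++k) output -= _a[k] * _y[k]; // feedback part

        _y[0] = output; // becomes y[n-1] after the next shift
        return output;
    }
}
```

For example, b = {0.5} and a = {1, -0.5} gives the one-pole lowpass y[n] = 0.5 x[n] + 0.5 y[n-1]. Because the history is per instance, an interleaved stereo buffer would need one filter instance per channel. A GUI for such a filter would only need to expose the two coefficient arrays.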